The iCub multisensory datasets for robot and computer vision tasks

This page hosts novel datasets constructed by the iCub robot equipped with an additional depth sensor and color camera, the Intel RealSense 435i. We employed the iCub robot to acquire color and depth information for 210 objects in different acquisition scenarios. These datasets can be used for robot and computer vision applications such as multisensory object representation, object concept formation, action recognition, affordance learning, and rotation- and distance-invariant object recognition. Note that this is ongoing work, and the content will be updated regularly.

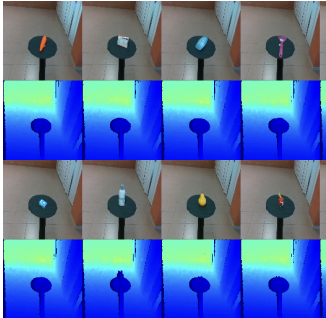

RGB-D turntable dataset. The experimental setup for this dataset consists of a motorized turntable and the iCub robot. To capture color and depth images, we placed each object on the turntable and rotated the table in five-degree steps until it completed a full rotation. This yielded 72 different views of each object. For each view, we collected one depth image and three color images, for a total of 288 images per object (60480 color and depth images across all objects).

Link to download dataset: https://box.hu-berlin.de/d/6f7b371ea38744a1bcf3
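The image counts quoted for the turntable dataset follow directly from the acquisition parameters. The short sketch below reproduces that arithmetic; the variable names are illustrative and are not taken from the dataset or its tooling.

```python
# Acquisition parameters as stated in the turntable dataset description.
STEP_DEG = 5          # turntable rotation per step, in degrees
DEPTH_PER_VIEW = 1    # one depth image per view
COLOR_PER_VIEW = 3    # three color images per view
NUM_OBJECTS = 210     # number of objects in the dataset

views_per_object = 360 // STEP_DEG                    # one full rotation
images_per_view = DEPTH_PER_VIEW + COLOR_PER_VIEW
images_per_object = views_per_object * images_per_view
total_images = images_per_object * NUM_OBJECTS

print(views_per_object, images_per_object, total_images)  # 72 288 60480
```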

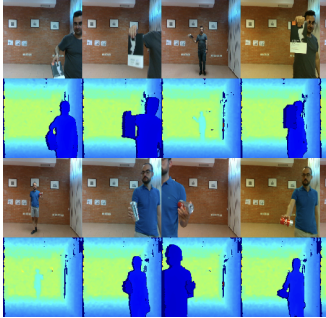

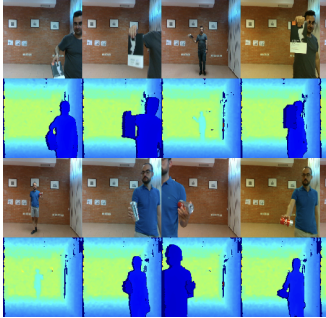

RGB-D Operator dataset. The experimental setup for this dataset is similar to that of the turntable dataset. Here, instead of a turntable, we employed four different operators to obtain more varied views of the objects: rotations about three axes instead of a single one, more poses, and noisier environmental conditions that introduced factors such as specularities and shadows. Link to download dataset: Note that the size of this dataset exceeds the limit of the cloud storage that I have, but I will share the dataset by partitioning it.

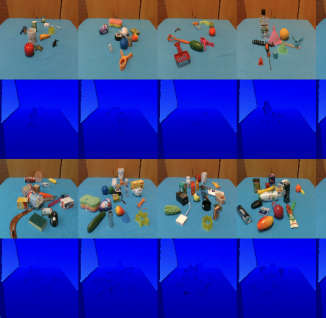

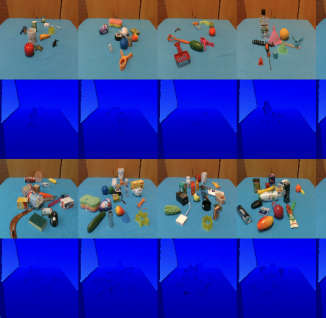

Scene understanding dataset(s). In this setup, we constructed two datasets for indoor scene understanding by creating cluttered scenes with 10 and 20 objects. In the first setup, we put 10 objects in front of the robot to capture depth and color images. After obtaining color and depth data of the scene, we randomly shuffled the objects’ poses 20 times to capture more variation. This procedure was repeated for all objects in the dataset. As a result, we created 21 different scenes with 10 objects each and collected 1680 depth and color images. In the second setup, we replicated the same procedure with 20 objects and shuffled these objects in the scene 40 times, creating a dataset with 1760 color and depth images. Combining the data from the first and second setups gives 3440 color and depth images (1680 + 1760).

Link to download dataset: https://box.hu-berlin.de/d/8cf5d696d4d5497180d2/
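The per-setup image counts for the scene understanding datasets can be reproduced with a small calculation. This is only a sketch under two assumptions that the description does not state explicitly: each capture yields four images (one depth and three color, as in the turntable dataset), and the 210 objects are split into groups of 10 or 20, with the last group allowed to be partial. The function name and constants are illustrative.

```python
import math

NUM_OBJECTS = 210
IMAGES_PER_CAPTURE = 4  # assumption: 1 depth + 3 color images per capture

def scene_image_count(objects_per_scene, arrangements_per_scene):
    """Return (number of scenes, number of images) for one setup."""
    # Assumption: objects are grouped into scenes; a final partial group
    # still counts as a scene.
    scenes = math.ceil(NUM_OBJECTS / objects_per_scene)
    images = scenes * arrangements_per_scene * IMAGES_PER_CAPTURE
    return scenes, images

print(scene_image_count(10, 20))  # (21, 1680)
print(scene_image_count(20, 40))  # (11, 1760)
```

Under these assumptions, the results match the 21 scenes and 1680 images reported for the first setup and the 1760 images reported for the second. The figure of 11 scenes for the second setup is inferred here and does not appear in the description.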

Action recognition dataset. We designed a setup in which the iCub robot acts as an action observer and four different operators act as action performers. The operators use four different tools to perform four different actions on 20 objects. In this setting, the color and depth images were captured before and after an action was performed on an object with a tool.

Link to download dataset: https://box.hu-berlin.de/d/6bc742f6dafb4dc2a36a/

Objects and data format specifications

- The color and depth images in these datasets were captured at a resolution of 640×480 and saved in PNG format. Note that the depth data were visualized using OpenCV (cv2.COLORMAP_JET) and saved as images.

- Objects: As can be seen from the banner of the web page, the datasets were constructed with various types of objects. Note that we used the same objects for the RGB-D turntable, RGB-D operator, and scene understanding datasets. Here, you can access the complete list of objects with their corresponding object IDs. The action recognition dataset was constructed with 20 objects, and the list of objects can be found here.
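After downloading, it can be useful to confirm that an image file matches the 640×480 PNG format described above. The sketch below reads the dimensions from the PNG header using only the Python standard library. The helper names `png_size` and `has_expected_size` are hypothetical and are not part of any dataset tooling.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_size(path):
    """Return (width, height) read from the IHDR chunk of a PNG file."""
    with open(path, "rb") as f:
        header = f.read(24)  # 8-byte signature + IHDR length, type, width, height
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a valid PNG file")
    # Width and height are stored as big-endian unsigned 32-bit integers.
    width, height = struct.unpack(">II", header[16:24])
    return width, height

def has_expected_size(path, expected=(640, 480)):
    """Check a PNG against the resolution stated for these datasets."""
    return png_size(path) == expected
```

For example, `has_expected_size("some_image.png")` (a placeholder path) returns `True` for a 640×480 PNG. This checks only the image dimensions, not the pixel content.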

Publications

- "iCub! Do you recognize what I am doing?": multimodal human action recognition on multisensory-enabled iCub Robot. Kas Kniesmeijer, Murat Kirtay, Workshop on Adaptive Social Interaction based on user's Mental Models and behavior in HRI at the 14th International Conference on Social Robotics.

- Murat Kirtay, Ugo Albanese, Lorenzo Vannucci, Guido Schillaci, Cecilia Laschi, and Egidio Falotico. 2020. The iCub multisensor datasets for robot and computer vision applications. In Proceedings of the 2020 International Conference on Multimodal Interaction (ICMI’20), October 25–29, 2020, Virtual event, Netherlands.

- A Multisensory Learning Architecture for Rotation-invariant Object Recognition, Murat Kirtay, Guido Schillaci, Verena V. Hafner, arXiv 2009.06292, (2020).

Theses

These datasets were used by MSc and BSc students for their thesis projects.

- BSc in Cognitive Science and Artificial Intelligence

  - A deep learning approach on fusion technique comparison applied to affordance classification, Christophe Friezas Gonçalves

- MSc in Data Science and Society

  - Unsupervised representation learning for human action and affordance recognition, Franklin Willemen

  - Deep reinforcement learning for object classification, Evelyn Pomasqui

  - Ensemble learning to recognize human actions using the iCub robot multimodal dataset, Kas Kniesmeijer

  - The next member of your household might be a robot: A multimodal deep learning approach in every-day object recognition developed for robot implementation, Tom Weerts

  - Deep learning-based object recognition model for humanoid robot, Kees Wagemans

  - Deep learning for classifying objects using the iCub robot dataset, Shifrah Goldblatt

Citing the datasets

Murat Kirtay, Ugo Albanese, Lorenzo Vannucci, Guido Schillaci, Cecilia Laschi, and Egidio Falotico. 2020. The iCub multisensor datasets for robot and computer vision applications. In Proceedings of the 2020 International Conference on Multimodal Interaction (ICMI’20), October 25–29, 2020, Virtual event, Netherlands.
